"Service Pauses on Release" Are a Thing of the Past: Non-Stop "Deployment Strategies" with Argo Rollouts

Now that "zero downtime" is the norm, do you have a "deployment strategy" in place?

In recent years, cloud technology has made applications far more capable, and the traffic they handle has grown accordingly. At this scale, even brief downtime can mean lost business opportunities and substantial losses.

Against this backdrop, techniques for minimizing downtime when releasing application upgrades continue to evolve.

Upgrading an application without stopping it is now taken for granted, and if a defect is found after an upgrade, there must also be a way to roll back to the previous version promptly.

A method for releasing or rolling back an application with minimal downtime is called a "deployment strategy."

When using Kubernetes, Argo Rollouts makes it easy to implement advanced deployment strategies such as Blue-green deployment, Canary release, and even "progressive delivery".

This article introduces Argo Rollouts and the deployment strategies it supports.

What is Argo Rollouts?

Argo Rollouts is a Kubernetes controller that brings deployment strategies such as Blue-green deployment and Canary release to Kubernetes, going beyond the rolling update offered by the standard Deployment resource.

If you are familiar with the Kubernetes Deployment resource, adopting Argo Rollouts is straightforward: you use its Rollout resource in place of a Deployment. The Rollout spec mirrors the Deployment spec and adds a strategy section that selects Blue-green or Canary.
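To make the comparison concrete, the sketch below builds a minimal Rollout manifest as a Python dictionary and prints it as JSON (which `kubectl apply -f` also accepts). It assumes Python 3.9+; the application name, image, replica count, and canary steps (10%, a manual pause, 60%, a 10-minute pause) are illustrative values chosen to match the Canary example later in this article, not settings from the original. Apart from `kind` and the `strategy` block, the manifest looks the same as a Deployment.

```python
import json


def make_rollout(name: str, image: str, replicas: int = 5) -> dict:
    """Build a minimal Argo Rollouts Rollout manifest (illustrative sketch).

    Name, image, replica count, and step values are hypothetical examples.
    """
    labels = {"app": name}
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Rollout",  # the only change from "kind: Deployment" at the top level
        "metadata": {"name": name},
        "spec": {
            # These fields are written exactly as in a Deployment.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
            # The strategy block is what Argo Rollouts adds.
            "strategy": {
                "canary": {
                    "steps": [
                        {"setWeight": 10},               # send 10% of traffic to the new version
                        {"pause": {}},                   # wait indefinitely for manual promotion
                        {"setWeight": 60},               # then 60%
                        {"pause": {"duration": "10m"}},  # wait 10 minutes, then finish at 100%
                    ]
                }
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_rollout("demo-app", "example/demo-app:v2"), indent=2))
```

The indefinite `pause: {}` step holds the rollout until someone promotes it, for example with the `kubectl argo rollouts promote` plugin command.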

Argo Rollouts also supports the more advanced practice of "progressive delivery." As in a Canary release, the new version is first released to only part of the system, and metrics for that portion are then analyzed.

The analysis determines whether the release has succeeded or failed, and a failed release is rolled back automatically.

This automatic rollback limits the impact of a failed release and reduces operating costs. Because Argo Rollouts automates this part of the process, releases go faster and deployment risk goes down.

Metrics analysis and traffic management in Argo Rollouts

The real value of Argo Rollouts lies in analyzing metrics and rolling back automatically based on them. Releases and rollbacks are carried out through traffic management. In this section, we look at the features of Argo Rollouts with a focus on traffic management and metrics analysis.

- Argo Rollouts leverages the traffic management capabilities of a service mesh or an Ingress controller to finely control how much traffic flows to the Pods of the new version.

- If no service mesh or Ingress controller is available, Argo Rollouts controls traffic flow by adjusting the replica counts of the old and new ReplicaSets.

- It integrates with monitoring tools such as "Prometheus" and "Datadog" and automatically decides, based on the collected metric values, whether to promote the new version or roll it back.
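Without a traffic router, the achievable traffic split is limited by the number of Pods. The Python sketch below approximates a requested canary weight by splitting a fixed number of replicas, assuming the Service spreads requests evenly across Pods; rounding the canary count up is an assumption of this sketch, not a description of Argo Rollouts internals. With four replicas, for example, a requested 10% becomes one canary Pod, or about 25% of traffic.

```python
import math


def split_replicas(total: int, canary_weight: int) -> tuple[int, int]:
    """Approximate a traffic weight by splitting replicas (sketch).

    Assumes even load balancing across ready Pods, so the new version's share
    of traffic is roughly its share of Pods. Rounding up is an assumption.
    """
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    canary = math.ceil(total * canary_weight / 100)
    stable = total - canary
    return canary, stable


# Show how coarse the split is with only four replicas.
for weight in (10, 25, 60, 100):
    canary, stable = split_replicas(4, weight)
    effective = 100 * canary / 4
    print(f"requested {weight:3d}% -> canary={canary}, stable={stable}, effective ~{effective:.0f}%")
```

This coarseness is the reason a service mesh or Ingress controller is preferable when you need small, precise weights such as 1% or 5%.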

As an example of metric-based rollback, with a rule such as "If more than 1% of HTTP requests over a 10-minute window return 503 errors, roll back," Argo Rollouts automatically stops sending traffic to the Pods of the new version and routes all traffic to the Pods of the old version.
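The decision in that rule boils down to a ratio and a threshold. The sketch below expresses it in Python; `WindowStats`, `decide`, and the `"inconclusive"` result for an empty window are hypothetical names and choices for illustration. In Argo Rollouts itself, such a rule is declared in an AnalysisTemplate (for instance, as a Prometheus query with a success condition) rather than coded by hand.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Request counts collected over one analysis window (e.g. 10 minutes)."""
    total_requests: int
    http_503: int


def decide(stats: WindowStats, max_error_rate: float = 0.01) -> str:
    """Return "rollback", "promote", or "inconclusive" for one window.

    Mirrors the example rule: roll back if more than 1% of requests are 503s.
    The default threshold and the empty-window behaviour are assumptions.
    """
    if stats.total_requests == 0:
        # No traffic means no evidence either way; do not promote blindly.
        return "inconclusive"
    error_rate = stats.http_503 / stats.total_requests
    return "rollback" if error_rate > max_error_rate else "promote"
```

For instance, `decide(WindowStats(total_requests=10_000, http_503=150))` returns `"rollback"`, because 1.5% exceeds the 1% threshold.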

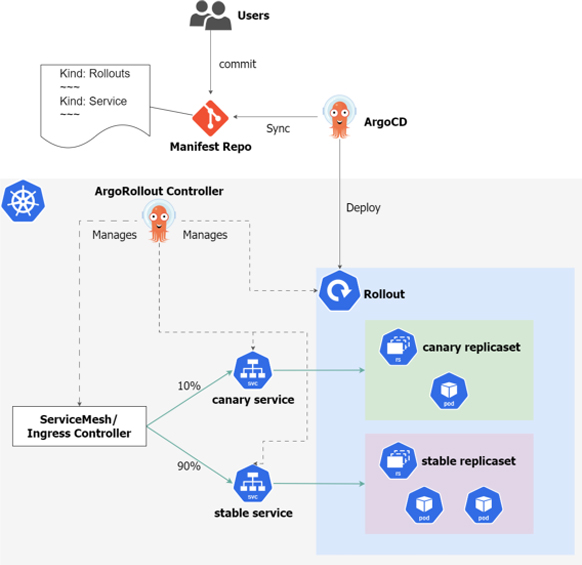

Argo CD and Argo Rollouts integration

Argo CD and Argo Rollouts are both delivery tools from the Argo project and are sometimes confused, so this section explains the differences and the relationship between them.

Argo Rollouts can be used alone but is often used in conjunction with Argo CD. In that sense, they are complementary tools, not competitors.

When the two are used together, the Rollout resources and the other Kubernetes manifests are all managed in a Git repository. When a manifest changes, Argo CD syncs the change to the cluster. If the Rollout object has changed, Argo Rollouts then manipulates the ReplicaSets for the new and old versions and updates traffic routing, such as the Ingress.

Relationship diagram between Argo CD and Argo Rollouts
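On the Argo CD side, the link to Git is an Application resource. The sketch below builds a minimal one as a Python dictionary; the repository URL, path, and namespaces are hypothetical, and automated sync with `prune` and `selfHeal` is one possible policy rather than a requirement. With this in place, Argo CD syncs the Rollout manifest from Git, and Argo Rollouts takes over from there.

```python
import json


def make_application(name: str, repo_url: str, path: str, namespace: str) -> dict:
    """Build a minimal Argo CD Application manifest (illustrative sketch)."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},  # where Argo CD is installed
        "spec": {
            "project": "default",
            # Git directory that holds the Rollout and the other manifests.
            "source": {"repoURL": repo_url, "targetRevision": "HEAD", "path": path},
            # Deploy into the same cluster Argo CD runs in.
            "destination": {"server": "https://kubernetes.default.svc", "namespace": namespace},
            # Sync automatically whenever Git changes (one possible policy).
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    app = make_application(
        "demo-app",
        "https://github.com/example/demo-manifests.git",  # hypothetical repository
        "rollouts/demo-app",                              # hypothetical path
        "demo",
    )
    print(json.dumps(app, indent=2))
```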

If a rollback occurs during a rollout, the manifest in Git still specifies the new version, but the Pods actually running are the old version. In this situation, Argo CD's health check reports the Rollout resource as "Degraded."

Argo CD also has a rollback function, but it only returns the application to the state of a specific commit in the Git repository; it does not provide fine-grained deployment control such as Blue-green deployment or Canary release.

Typical deployment strategies

Before we move on to how to use Argo Rollouts, let's review the main deployment strategies.

"Deployment strategy" covers a variety of methods, and the available options vary greatly depending on the environment. Developers need to understand the characteristics of each method and select the most appropriate one from the following perspectives, taking into account the nature of the application, the purpose of the release, and the operational conditions.

1. Deployment Speed and Stability

The strategy you choose has a significant impact on how quickly and how safely an application can be deployed. The right choice can improve both deployment speed and application stability.

2. Minimize Downtime

Choosing an appropriate strategy minimizes downtime during deployment and keeps application availability as high as possible.

3. Release Quality Control

Deployment strategies also bear directly on release quality control. For example, a strategy that exposes the new version to only some users first lets you check its quality before every user is affected.

This article introduces three typical deployment strategies: rolling update, Blue-green deployment, and Canary release.

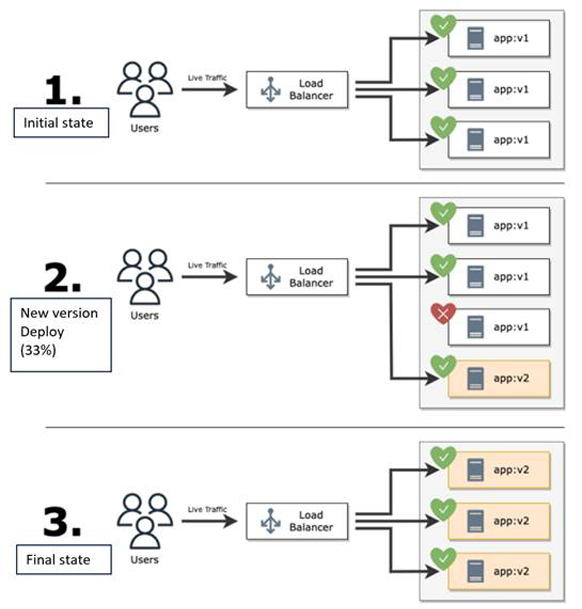

Rolling update

This method gradually replaces the instances of an application with instances of the new version. It allows a smooth transition from the old version to the new one and minimizes application downtime.

Rolling update release flow

The update process is as follows:

- At the start, all user traffic is handled by the old version (v1).

- Create one instance of the new version (v2), attach it to the load balancer, and then detach one instance of the old version (v1) from the load balancer.

- Repeat the step above until every instance has been replaced with the new version (v2) and all user traffic is handled by v2.
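The steps above can be simulated in a few lines of Python. This toy model (the `rolling_update` function is hypothetical) treats the load balancer pool as a list of version labels and records the pool after every step; capacity stays constant because one instance is attached before another is detached.

```python
def rolling_update(pool: list[str], new: str) -> list[list[str]]:
    """Replace instances one at a time and return the pool after each step.

    Toy model: each entry is the version running on one instance behind the
    load balancer.
    """
    pool = list(pool)
    history = [list(pool)]
    while any(version != new for version in pool):
        pool.append(new)  # attach one new instance to the load balancer
        old_index = next(i for i, v in enumerate(pool) if v != new)
        pool.pop(old_index)  # detach one old instance
        history.append(list(pool))
    return history


for step in rolling_update(["v1", "v1", "v1"], "v2"):
    print(step)
```

For three instances, the pool goes from all v1 to all v2 in three steps. Rolling back means running the same loop in the opposite direction, one instance at a time, which is why recovery from a failed rolling update is slow.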

Because this method modifies the existing environment in place, switching back to the previous version takes time if the new version fails.

In addition, because old and new versions run side by side during the update, it is difficult to update several interdependent components at the same time while keeping them consistent with one another.

Blue-green deployment, introduced next, addresses these problems.

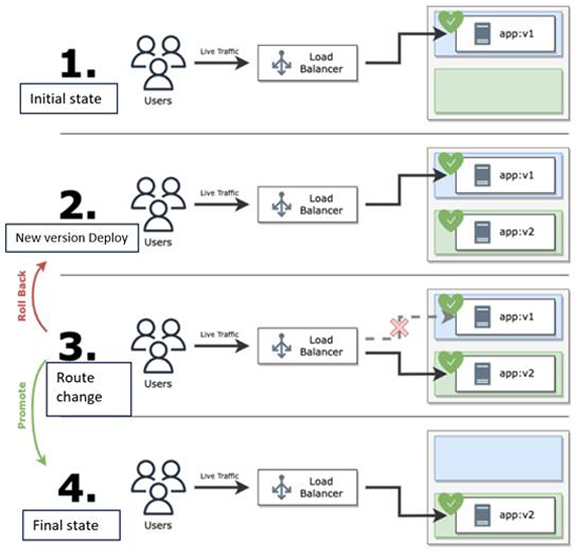

Blue-green deploy

This method deploys the new version of an application to a separate environment and then switches traffic to it all at once.

The old version and the new version are completely separated, allowing for quick rollback in the event of a problem.

Blue-green deploy release flow

The update process is as follows:

- At the start, all user traffic is handled by the old version (v1).

- Create instances of the new version (v2) with the same configuration as the old version (v1).

- Switch the load balancer's destination to the new version (v2), so that all user traffic is processed by v2.

  - If the new version (v2) turns out to have problems, switch the load balancer back to the old version (v1) to roll back instantly.

- Delete the old version (v1), which is no longer needed.
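The essential point of Blue-green deployment is that cutover and rollback are a single pointer change. The Python sketch below models that with a hypothetical `LoadBalancer` class holding two named environments (`blue` and `green`); it is a toy model, not the configuration of any particular load balancer. In Argo Rollouts, the equivalent switch is made by repointing the active Service's selector at the new ReplicaSet.

```python
from dataclasses import dataclass


@dataclass
class LoadBalancer:
    """Toy load balancer that sends all traffic to one named environment."""
    environments: dict[str, str]  # environment name -> version running there
    active: str                   # environment currently receiving all traffic

    def route(self) -> str:
        return self.environments[self.active]

    def switch(self, env: str) -> None:
        """Cut over (or roll back) by changing a single pointer."""
        if env not in self.environments:
            raise KeyError(env)
        self.active = env

    def retire(self, env: str) -> None:
        """Delete an environment that no longer receives traffic."""
        if env == self.active:
            raise ValueError("cannot retire the active environment")
        del self.environments[env]


lb = LoadBalancer(environments={"blue": "v1"}, active="blue")
lb.environments["green"] = "v2"  # build v2 alongside v1
lb.switch("green")               # cut over: all traffic now reaches v2
lb.switch("blue")                # instant rollback if v2 misbehaves
print(lb.route())
```

Once v2 has proven itself, the final step is `lb.switch("green")` followed by `lb.retire("blue")`. Until then, both environments exist at once, which is where the extra cost of this strategy comes from.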

This method does not change the existing environment, so if a problem occurs in the new environment, traffic can be switched back to the existing one immediately. On the other hand, because both environments must run at the same time for a while, you pay for roughly double the resources during that period.

What the rolling update and Blue-green deployment introduced so far have in common is that every user is exposed to the risk of a faulty release.

The Canary release, introduced next, addresses this problem.

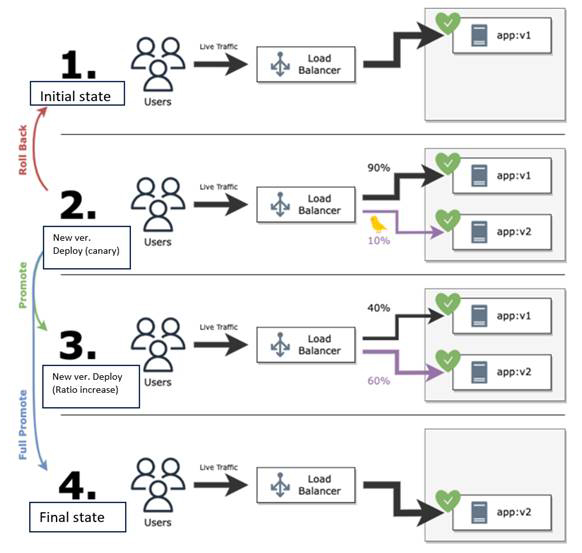

Canary release

This method first releases the new version of an application to a subset of users (the canary) and then gradually rolls it out to all users. The quality of the new version can be evaluated on real traffic, and it can be rolled back quickly if problems arise.

Release flow of Canary Release

The update process is as follows:

- At the start, all user traffic is handled by the old version (v1).

- Designate a portion of user traffic (10% in the figure above) as canary traffic, and have the load balancer send only that canary traffic to the new version (v2).

  - If the new version (v2) turns out to have problems, an operator can roll back by switching the load balancer back to the old version (v1).

- Once the canary traffic shows no problems, increase the share of traffic sent to the new version in stages (60% in the figure above).

  - As traffic to the new version (v2) increases, instances of v2 are added and instances of v1 are removed.

- Repeat the steps above until every instance has been replaced with the new version (v2) and all user traffic is handled by v2.
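The sketch below models the canary flow in Python: `route_request` sends about `canary_weight` percent of requests to v2, and `run_canary` raises the weight step by step, returning 0 (all traffic back on v1) as soon as a health check fails. The function names, the random per-request routing, and the boolean health check are simplifying assumptions; in practice the split is performed by the load balancer, service mesh, or Ingress controller.

```python
import random
from typing import Callable


def route_request(canary_weight: int, rng: random.Random) -> str:
    """Send roughly canary_weight percent of requests to v2, the rest to v1."""
    return "v2" if rng.randrange(100) < canary_weight else "v1"


def observed_share(canary_weight: int, n: int = 10_000, seed: int = 0) -> float:
    """Fraction of n simulated requests that reached v2."""
    rng = random.Random(seed)
    hits = sum(route_request(canary_weight, rng) == "v2" for _ in range(n))
    return hits / n


def run_canary(steps: list[int], is_healthy: Callable[[int], bool]) -> int:
    """Walk through the canary weights; return the final weight for v2.

    Returns 0 (roll back to v1) on the first failed check, or 100 (full
    promotion) once every step has passed.
    """
    for weight in steps:
        if not is_healthy(weight):
            return 0
    return 100


print(observed_share(10))                      # close to 0.10
print(run_canary([10, 60], lambda w: True))    # healthy at every step
print(run_canary([10, 60], lambda w: w < 60))  # fails once 60% is reached
```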

Because this method changes the existing environment while limiting how many users are exposed to the new version, it lets you control the percentage of users affected by a failure in the new version.

However, this strategy is not a "best strategy" that solves everything either.

It inevitably adds to the operations team's workload: someone has to judge whether the quality indicators collected from the canary are appropriate and decide how to evaluate them.

As discussed at the beginning of this section, choose the strategy that best fits the nature of the application, the purpose of the release, and the operational conditions.

(Supplement) Progressive delivery

As mentioned briefly at the beginning, progressive delivery is a relatively new approach, positioned as the next step beyond CD (Continuous Delivery), which the three strategies above represent.

In CD, a person has to judge whether the new environment is sound, and when problems occur, rollback must be performed manually on top of the deployment work itself. PD (Progressive Delivery), in contrast, adds "analysis" to the deployment process, aiming to combine the speed of a CD pipeline with lower deployment risk. It will be explained in detail in the next article.

Common perspectives to consider in your deployment strategy

Although we have presented a variety of deployment strategies, there are many aspects to consider when choosing a zero-downtime deployment strategy. They are not limited to the infrastructure layer; some must also be addressed in the application layer. Below are some typical aspects, with example countermeasures.

Lost requests

Because the update process can run while requests are still being processed, an instance must stop only after it has finished every request it is holding. Make sure your web server library or middleware supports "graceful shutdown."
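At the application level, graceful shutdown means refusing new requests and waiting for in-flight ones to finish before exiting. The Python sketch below shows only that bookkeeping, using a hypothetical `InFlightTracker` class; wiring it to a real web server and to a SIGTERM handler is left out. In Kubernetes, a terminating Pod receives SIGTERM and is given `terminationGracePeriodSeconds` (30 seconds by default) before being killed, so the drain timeout should fit inside that window.

```python
import threading


class InFlightTracker:
    """Track in-flight requests so shutdown can wait for them (sketch)."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._in_flight = 0
        self._draining = False

    def begin(self) -> bool:
        """Register a new request; refuse it once draining has started."""
        with self._cond:
            if self._draining:
                return False
            self._in_flight += 1
            return True

    def end(self) -> None:
        """Mark a request as finished and wake the drainer when idle."""
        with self._cond:
            self._in_flight -= 1
            if self._in_flight == 0:
                self._cond.notify_all()

    def drain(self, timeout: float) -> bool:
        """Stop accepting work and wait for in-flight requests to finish.

        Returns True if everything finished within the timeout.
        """
        with self._cond:
            self._draining = True
            return self._cond.wait_for(lambda: self._in_flight == 0, timeout=timeout)
```

A request handler would call `begin()` before doing work (and reject the request if it returns False) and `end()` when it finishes; the SIGTERM handler would call `drain()` and exit afterwards.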

Session disconnection

If a session must always be handled by the same instance, you need to either configure sticky sessions on the load balancer or store session information outside the application.
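The second option can be sketched as follows: instances keep no session state of their own and read and write it through a shared store, so a request can land on v1 or v2 without losing the session. The `SessionStore` and `AppInstance` classes are hypothetical; `SessionStore` is an in-memory stand-in for an external store such as Redis or a database. Storing sessions externally also avoids a weakness of sticky sessions during a rollout: when the pinned instance is removed, its in-memory sessions disappear with it.

```python
class SessionStore:
    """In-memory stand-in for an external session store (hypothetical)."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}

    def load(self, session_id: str) -> dict:
        return dict(self._data.get(session_id, {}))

    def save(self, session_id: str, session: dict) -> None:
        self._data[session_id] = dict(session)


class AppInstance:
    """An application instance that keeps no session state of its own."""

    def __init__(self, version: str, store: SessionStore) -> None:
        self.version = version
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> list[str]:
        session = self.store.load(session_id)
        session["cart"] = session.get("cart", []) + [item]
        self.store.save(session_id, session)
        return session["cart"]


store = SessionStore()
old, new = AppInstance("v1", store), AppInstance("v2", store)
old.add_to_cart("abc123", "apple")        # first request served by v1
print(new.add_to_cart("abc123", "pear"))  # next request lands on v2; the cart survives
```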

Backward compatibility

Unlike traditional releases performed with the service closed, requests keep arriving throughout a zero-downtime release, so different versions of the application must remain compatible with each other's data. Be especially careful not to overlook the compatibility of data in the database.
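One common way to keep data compatible across versions is the "expand and contract" (parallel change) pattern: the new version adds columns without removing old ones, keeps writing the old ones, and readers accept rows in either shape. The Python sketch below illustrates this with a hypothetical schema change in which v2 adds a `display_name` column to a user table that v1 stores as `first_name` and `last_name`; the function names are also hypothetical. The old columns can be dropped only after v1 has been fully retired.

```python
def write_user_v2(first: str, last: str) -> dict:
    """v2 writes the new column and also the old ones, so v1 can still read the row."""
    return {"first_name": first, "last_name": last, "display_name": f"{first} {last}"}


def read_display_name(row: dict) -> str:
    """Read a user row written by either v1 or v2."""
    if row.get("display_name"):
        return row["display_name"]
    # Fall back to the old columns for rows written by v1.
    return f"{row.get('first_name', '')} {row.get('last_name', '')}".strip()


rows = [
    {"first_name": "Hanako", "last_name": "Yamada"},  # row written by v1
    write_user_v2("Taro", "Suzuki"),                   # row written by v2
]
for row in rows:
    print(read_display_name(row))
```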

Practice deployment strategies with the Argo Rollouts sample

In this article, we provided an overview of Argo Rollouts and the deployment strategies supported by Argo Rollouts.

Temporary service outages at release time are a thing of the past. Today, engineers face the challenge of reducing downtime to near zero and minimizing the impact on users when a new version turns out to be defective, and, as we have seen, a variety of deployment strategies have been proposed to meet that challenge.

Original Article

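This article is a translation and adaptation of an April 2023 ITmedia @IT article: 「リリース時にサービス一時停止」は過去の話――Argo Rolloutsによる今どきなノンストップ「デプロイ戦略」:Cloud Nativeチートシート(26)- @IT (itmedia.co.jp) ("'Pausing the Service at Release' Is a Thing of the Past: Modern Non-Stop 'Deployment Strategies' with Argo Rollouts", Cloud Native Cheat Sheet (26)).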