Security Risks of Generative AI and Countermeasures, and Its Impact on Cybersecurity

Generative AI is being used by both individuals and companies. Here are some of the security risks of generative AI that are useful but can't be ignored, and what to look out for. We also look at how generative AI could change the world of cybersecurity.

Generative AI and Security

Since the appearance of ChatGPT, generative AI, often referred to as the modern industrial revolution, has attracted worldwide interest. However, new technologies come with new security risks and generative AI is not an exception. It is important to use the technology with a proper awareness of the risks it poses. Furthermore, because generative AI has a high degree of versatility, not only the security risks of generative AI itself but also the possibility that it may affect the entire cybersecurity field cannot be ignored.

The term "Generative AI" is sometimes used in various meanings, but this article is limited to interactive AI using a large-scale language model (LLM) represented by ChatGPT. Any other reference to generative AI (such as AI image generator) should be specified. Note that only risks specific to generative AI will be addressed here, and risks assumed in general information systems regardless of the use of generative AI, such as DoS attacks and supply chain attacks, will be omitted in this article.

Security Risks Users Should Be Aware of

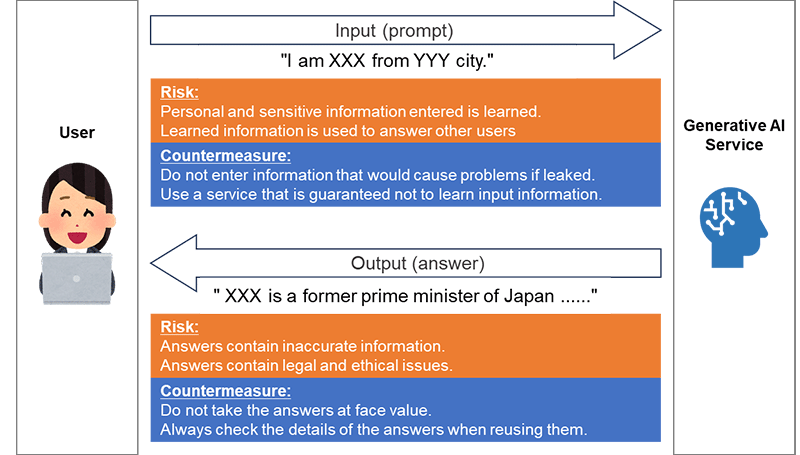

First, let us consider the security risks that users of services like ChatGPT should be aware of. Between the user and the generative AI, the user inputs an instruction called a "prompt," and the generative AI outputs the AI-calculated answer appropriate to the received instruction.

The two main risks assumed here are the leakage of input information and the output of inappropriate information. First, information leakage could occur if the information that a user inputs into the generative AI is used as learning material for the generative AI. The input information may be included in the output to other users resulting in an information leakage. The countermeasure is to not input information that should not be leaked, or use a service that does not use input information for learning and is guaranteed to handle input information appropriately.

The second risk is the output of inappropriate information. The generative AI may plausibly output untrue content, and this phenomenon is called hallucinations. It may also output legal and ethical issues, such as copyright infringement, discrimination, and bias. To reduce these inappropriate outputs, developers of generative AI are working to address them. However, it is currently difficult to eliminate them completely, and users must be aware of these risks in the output of generative AI and be careful not to take them at face value. In particular, when reusing the output, such as creating a report based on the output of generative AI, users should be responsible for checking its accuracy and compliance.

Figure 1 Security Risks from the Perspective of Users of Generative AI Services

Risks of Incorporating Generative AI into Services

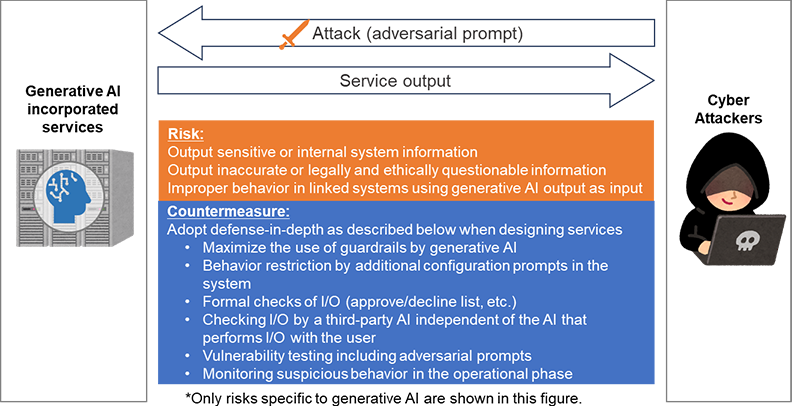

Next, we will consider the security risks from the perspective of a provider who provides service incorporating generative AI. A chat interface has been used as a temporary point of contact for users, and this would be a typical use case where generative AI is used. The risk assumed here is improper output for unexpected input from the user. We will look at input and output in detail.

Input can be targeted not only by ordinary users but also by malicious cyberattackers. Cyberattackers enter crafted prompts to intentionally generate inappropriate output that would not normally occur. These prompts are called adversarial prompts. Services that use generative AI to learn input from users should also consider the risk of improperly biased output after receiving a large amount of politically or ethically biased input.

Next is the output, which has the risk of causing three problems when an input containing an adversarial prompt is accepted.

- Information leakage by outputting information about other users or internal system information

- Responses that are inappropriate as a service such as factual errors, bias, discrimination, incitement of crime, or copyright infringement

- Triggering unintended behavior of a linked system when the output of a generative AI is used as input for the linked system

As a common countermeasure, in addition to the mechanism (guardrail) provided by the generative AI developers mentioned in the previous section to suppress inappropriate answers, it is necessary to consider the multilayered countermeasures listed below.

- Limiting behavior with additional prompts in the service (for example, not answering questions outside a specific area)

- Formal checks of I/O (for example, allowing only "Yes" or "No" output)

- Checking of I/O with an independent generative AI that is different from the generative AI that performs I/O with the user

- Vulnerability testing including implementation of adversarial prompts

- Monitoring for suspicious behavior of generative AI and linked systems during the operational phase

Since the degree of freedom of input/output decreases as countermeasures are tightened, it is necessary to determine what limits are imposed depending on the nature of the service. For services with low risk tolerance, it is necessary to fully consider whether generative AI should really be used.

Open Web Application Security Project (OWASP), an international application security organization, has published "OWASP Top 10 for LLM Applications" which describes the particularly serious security risks of LLM-enabled applications. The non-specific risks of generative AI, which have been omitted in this article, are also covered.

Figure 2 Security Risks from the Perspective of Providers of Generative AI-powered Services

Impact of Generative AI on Overall Cybersecurity

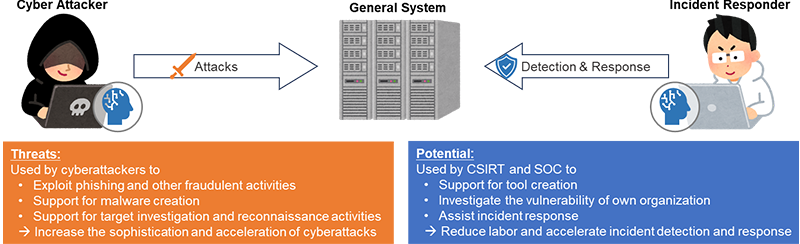

We have discussed the security risks and countermeasures of generative AI itself. In this section, we will move away from that and consider the impact of generative AI on overall cybersecurity. Compared to conventional software, generative AI has fewer hurdles making it easy to use, but it is also very versatile. Therefore, the development of generative AI will affect cybersecurity in various ways.

As far as we know at the time of writing, there have been no reports of completely new cyberattacks using generative AI, but there is no doubt that some cyberattackers are already using generative AI. We speculate that it is being used to improve the sophistication and efficiency of existing cyberattacks, as described below.

- Exploitation for fraud: email and fake news generation for phishing and business email compromise (BEC) purposes (not only the interactive AI targeted in this article but also the AI generators of image, audio, and video are effectively used.)

- Malware creation support: advanced malware is difficult to create with just the current generative AI, but skilled attackers exploit it as a programming support tool to increase creation efficiency

- Investigation and reconnaissance support: automation and efficiency of human decision making in pre-attack investigation and reconnaissance

While these antisocial uses should have been denied by the guardrails built into generative AI, attackers have refined adversarial prompts to circumvent the guardrails. Generative AI developers have tightened the limits, and now there's generative AI that are tuned for cyberattacks without any output limits, and sold to cyberattackers as a black business.

However, cyberattackers are not the only ones to benefit from generative AI. Computer security incident response teams (CSIRTs) and security operations centers (SOCs) will also need to actively use generative AI to combat cyberattacks. In the future, we may see fully automated defense systems stop fully automated cyberattacks. At this point, however, it is difficult to fully rely on generative AI to handle all of this, so for the time being, it is likely that the generative AI will be used to enhance productivity and supply the lack of human resources of the following:

- Tool creation support: Support for the creation of tools to streamline the daily operations of CSIRTs and SOCs, as well as incident investigation and response operations

- Vulnerability investigation: The flip side of attacker investigation and reconnaissance support is streamlining the investigation of locations (attack surfaces) that may be subject to attack by your organization

- Assistant: Assisting in incident investigation and response operations. Users need a certain level of skills to provide accurate instructions (prompts).

Figure 3 Threats and Potential of Generative AI for Cybersecurity

NTT DATA's Initiatives

At NTT DATA, security-related organizations are working together globally to investigate and verify the security of generative AI.

In particular, NTT DATA is making efforts to utilize generative AI for security operations. As the shortage of advanced security personnel becomes a global issue, the use of generative AI for security operations will become essential. Specific examples of these efforts include the automatic generation of investigation queries in threat hunting, the reduction of false positives in security incident detection, and the assistance of SOC analysts. An attempt is also made to improve accuracy by having the generative AI refer to past incident response information.

By accumulating the results of these efforts, NTT DATA aims to achieve a proactive response to incidents by using generative AI that aggregates NTT DATA's global security knowledge.

Teppei Sekine

He provides implementation and operational support for systems such as security information and event management (SIEM) and user and entity behavior analytics (UEBA) to a variety of clients. He is also engaged in security consulting including the development of security roadmaps and security risk assessments. Currently developing MDR services to be provided by NTT DATA globally.