Competitiveness and Governance in the Post-Generative AI Era

How Global AI Rules Are Redefining Offensive and Defensive AI Strategy

Artificial Intelligence (AI) has shifted from the margins to the core of business operations, with generative AI accelerating adoption across industries. Yet alongside this opportunity come risks that demand robust governance. The EU, U.S., and Japan are pursuing distinct approaches, ranging from strict regulation to voluntary guidelines. For companies, success now hinges not just on deploying AI but on governing it effectively. Those that balance innovation with accountability will gain trust, unlock long-term growth, and lead in the post-generative AI era.

Index

- The Current State of AI Evolution and Corporate Utilization

- Business Innovation Driven by the Democratization of Technology

- Major Risks in AI Utilization and the Need for Control

- Global Trends in AI Regulation and Governance

- The Role of Management and Key Principles for Building an AI Governance Framework

- Essentials for Establishing Responsible AI Utilization

- Conclusion

Artificial intelligence now spans a spectrum of capabilities that are reshaping how industries operate at every level. From predictive maintenance in manufacturing to supply-demand modeling and the automation of complex workflows, AI has moved from the periphery into the core of business operations. Microsoft, for example, has said that AI saved it more than $500 million in 2024 (*1), part of broader gains in customer service, software engineering, sales productivity, and more.

Generative AI, in particular, has accelerated this shift. Its ability to produce dialogue, imagery, and code with near-human fluency has transformed it from an experimental technology into a practical tool deployed across sectors. Competitive advantage increasingly hinges not on whether an enterprise uses AI, but on the sophistication with which it integrates, scales, and governs these systems.

At the frontier, research into artificial general intelligence (AGI) is pushing further still. Unlike today's specialized systems, AGI aims to replicate human-level reasoning and transfer knowledge across domains, a leap that could redraw the boundaries of business, science, and society. Yet with greater capability come greater risks, such as unreliable outputs, exploitable vulnerabilities, ethical blind spots, and mounting regulatory scrutiny.

The challenge for leaders is no longer whether to deploy AI, but how to govern it. Which risks can be tolerated, which must be constrained, and how can risk management itself become a source of value? Treated merely as overhead, governance stifles innovation; designed as a strategic framework, it becomes an asset - building resilience, reinforcing trust, and unlocking scale.

The next wave of competition will be decided not just by who builds AI most aggressively, but by who governs it most effectively. Offensive innovation and defensive maturity must advance in tandem. So what trends are we seeing in AI governance? And how can organizations strike a balance between caution and opportunity?

1. The Current State of AI Evolution and Corporate Utilization

The pace of AI's evolution in recent years has been nothing short of transformative. With the rise of generative AI and large language models (LLMs), systems are no longer confined to narrow tasks. They now generate natural dialogue, summarize and translate at scale, create images and audio, and even produce program code - capabilities once thought to be the exclusive domain of humans.

Some white-collar tasks that previously relied on human labor are now being streamlined and automated by AI, rapidly raising expectations for improved productivity and business transformation within companies.

A 2024 Japanese domestic survey found that while only 9.9% of companies had deployed generative AI in 2023, that number leapt to 25.8% just one year later (*2). Another survey showed that nearly nine out of ten companies using AI are already seeing measurable benefits (*3). Together, these findings mark a tipping point: AI has moved from pilot projects to full-scale deployment across industries.

Still, adoption is far from seamless. Accuracy fluctuations, algorithmic bias, and the risk of information leakage continue to hold companies back, especially in critical sectors such as healthcare and infrastructure, where errors carry severe societal consequences. The risks became tangible in 2023, when Samsung banned employee use of generative AI tools such as ChatGPT after staff uploaded sensitive internal code to the service, raising concerns about confidential data being exposed to public models (*4). Around the same time, Italy's data protection regulator temporarily blocked ChatGPT over suspected GDPR violations, citing transparency and privacy concerns (*5). These incidents showed how quickly enthusiasm for generative AI can collide with legal, ethical, and security risks.

In response, organizations are beginning to recalibrate, seeking a balance between opportunity and control. By 2024, more than half of companies had already started to establish AI utilization policies and risk management frameworks, introducing clearer checkpoints and audit mechanisms to govern business applications (*3).

In this new phase, decision-making is less about "whether" to adopt AI, and more about portfolio strategy: which AI systems to deploy, under what governance structures, and with what safeguards in place.

2. Business Innovation Driven by the Democratization of Technology

The rise of AI has accelerated the democratization of technology. It has put powerful capabilities, once reserved for specialists, into the hands of a much broader workforce. What used to require advanced training or coding expertise is now accessible through conversational AI interfaces. Employees in sales, marketing, or planning roles can run data analyses or generate summaries in a matter of minutes. These were tasks that once demanded dedicated technical staff. The result is a wave of business innovation that cuts across departmental and role boundaries.

In practice, the impact is already visible. In software development, AI is automating code generation and testing. On the factory floor, it is spotting anomalies and enabling predictive maintenance. In the back office, it is streamlining white-collar processes through automation that goes far beyond traditional robotic process automation (RPA). By lowering the technical barrier to entry, AI broadens access to knowledge and skills. This not only boosts individual productivity and sparks new ideas but also decentralizes AI use across the organization, making it easier for insights from the front lines to shape business strategy.

3. Major Risks in AI Utilization and the Need for Control

However, democratization comes with risks. Because powerful AI tools can be used without deep IT knowledge, the margin for error grows. Without proper guardrails, mistakes can scale just as quickly as successes. Put simply: if anyone can use AI, anyone can misuse it. That makes governance more crucial than ever.

Recognizing and managing these risks is the foundation for safe and sustainable adoption. Five of the most pressing challenges are:

- Hallucination
  - AI systems can generate content that appears convincing but is factually incorrect, leading to poor decisions or the spread of misinformation.

- Information Leakage
  - Sensitive corporate or personal data may inadvertently be exposed if it is entered into AI tools without safeguards.

- Prompt Injection
  - Malicious prompts or hidden instructions can manipulate models into disclosing confidential information or behaving unpredictably.

- Lack of User Literacy
  - Employees who use AI without understanding its limitations risk misinterpreting outputs or overestimating accuracy.

- Copyright and Privacy Infringement
  - AI may reproduce copyrighted material or personal data without consent, raising legal and ethical concerns.

The severity of these risks grows with the scope of deployment. While an error in an individual productivity tool may be inconvenient, failures in enterprise systems or customer-facing services can trigger financial, reputational, or even regulatory crises.

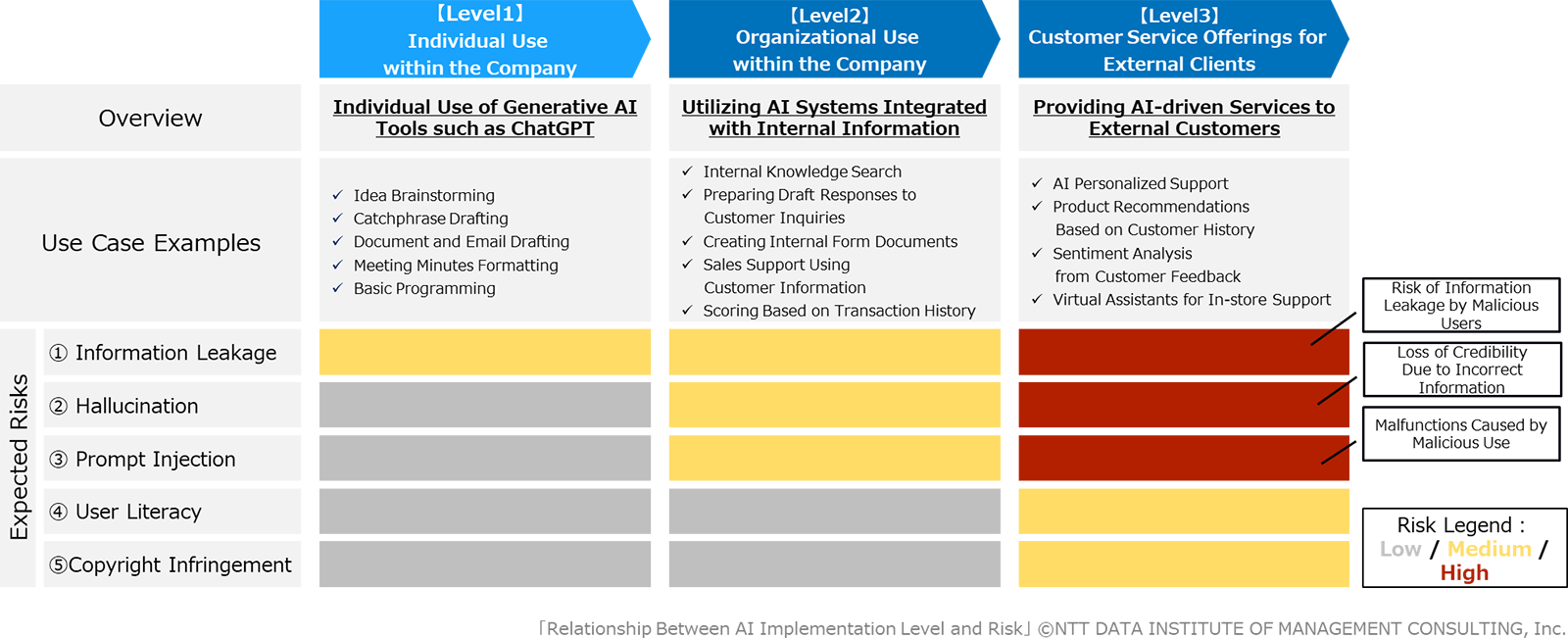

Figure 1: Relationship Between AI Implementation Level and Risk

As Figure 1 shows, risk factors are diverse, but they can be mitigated through proper controls. The answer is not to halt adoption; instead, companies must develop mechanisms to keep risks within acceptable bounds. Practical measures include human review of AI outputs, clear policies prohibiting the input of sensitive data, and a whitelist of vetted AI tools approved for internal use.
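Two of these measures, the approved-tool whitelist and the ban on entering sensitive data, can be enforced as a simple check before a prompt leaves the organization; human review remains an organizational process. The sketch below is a minimal, hypothetical Python illustration, not a production data-loss-prevention system: the tool names in `APPROVED_TOOLS`, the regular expressions in `SENSITIVE_PATTERNS`, and the `check_prompt` helper are all assumptions that a company would replace with its own policy and classification tooling.

```python
import re
from dataclasses import dataclass, field

# Hypothetical whitelist of vetted AI tools approved for internal use.
APPROVED_TOOLS = {"internal-chat-assistant", "code-review-copilot"}

# Hypothetical patterns standing in for "sensitive data". Real policies
# would rely on a proper data-classification or DLP service instead.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "card-like number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "confidential marker": re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE),
}


@dataclass
class CheckResult:
    allowed: bool
    reasons: list[str] = field(default_factory=list)


def check_prompt(tool: str, prompt: str) -> CheckResult:
    """Decide whether a prompt may be sent to the given AI tool."""
    reasons = []
    # Policy 1: only whitelisted tools may be used.
    if tool not in APPROVED_TOOLS:
        reasons.append(f"tool '{tool}' is not on the approved list")
    # Policy 2: block prompts that look like they contain sensitive data.
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(prompt):
            reasons.append(f"prompt appears to contain a {label}")
    return CheckResult(allowed=not reasons, reasons=reasons)


if __name__ == "__main__":
    ok = check_prompt("internal-chat-assistant", "Summarize this meeting agenda.")
    blocked = check_prompt("unvetted-tool", "Send jane.doe@example.com the CONFIDENTIAL plan")
    print(ok.allowed, ok.reasons)
    print(blocked.allowed, blocked.reasons)
```

Returning every reason rather than stopping at the first violation gives users and auditors a complete picture of why a request was blocked, which supports the literacy and audit goals discussed above.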

Governments and industry bodies are also working to standardize these practices. In the United States, for example, the National Institute of Standards and Technology (NIST) developed the AI Risk Management Framework (AI RMF) in collaboration with the public and private sectors, encouraging organizations to adopt voluntary but robust safeguards.

Ultimately, AI risk management is not a technical afterthought but a core management responsibility. Effective governance is the prerequisite for safe, secure, and value-driven AI utilization.

4. Global Trends in AI Regulation and Governance

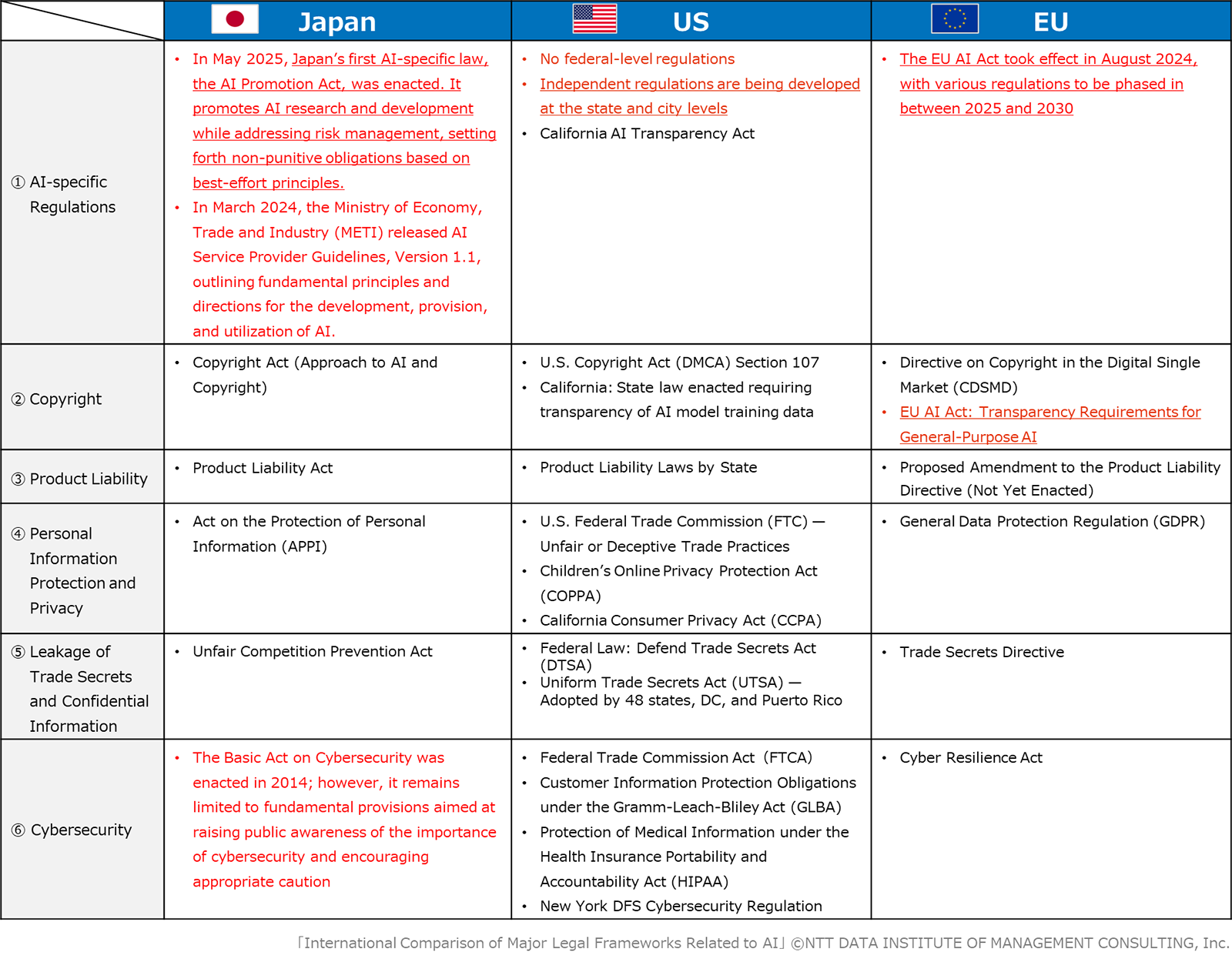

Figure 2: International Comparison of Major Legal Frameworks Related to AI

Across the globe, governments are racing to shape the rules of AI. Nowhere is this more evident than in Europe, where the AI Act, adopted in 2024, introduces one of the most comprehensive regulatory frameworks to date. Its obligations are being phased in from 2025 onward, starting with the prohibitions on unacceptable-risk systems in February 2025.

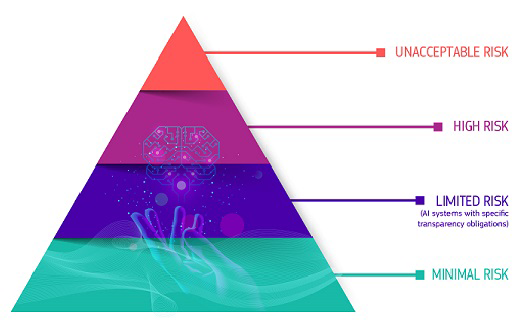

At the heart of the Act is a risk-based classification system (see Figure 3). AI applications are divided into four categories: unacceptable risk, high risk, limited risk, and minimal risk. Systems deemed to carry "unacceptable risk", for example, those that threaten human rights, are banned outright. High-risk systems face strict obligations around testing, transparency, and human oversight.

Figure 3: Four Risk Classifications of AI Systems in the AI Act (A Risk-Based Approach)

- Source: European Commission Website "Shaping Europe's Digital Future"
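To make the tiered structure concrete, the sketch below encodes the four categories as a lookup from tier to headline treatment, applied to a company's AI inventory. It is a simplified, hypothetical Python illustration: the `TREATMENT` summaries paraphrase the European Commission's overview, while the `INVENTORY` entries and the `treatment_for` helper are assumptions. In practice, assigning a system to a tier requires legal analysis of the Act itself.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories of the EU AI Act (simplified)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Headline treatment per tier, paraphrased and heavily simplified.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "Prohibited: may not be placed on the EU market.",
    RiskTier.HIGH: "Allowed with strict obligations: risk management, testing, "
                   "documentation, transparency, and human oversight.",
    RiskTier.LIMITED: "Allowed with transparency duties, e.g. telling users "
                      "they are interacting with an AI system.",
    RiskTier.MINIMAL: "Allowed with no specific new obligations.",
}

# Hypothetical internal inventory: use case -> tier assigned after legal review.
INVENTORY = {
    "social scoring of citizens": RiskTier.UNACCEPTABLE,
    "CV screening for hiring": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def treatment_for(use_case: str) -> str:
    """Describe how a catalogued use case is treated under its assigned tier."""
    tier = INVENTORY[use_case]
    return f"{use_case} [{tier.value}]: {TREATMENT[tier]}"


if __name__ == "__main__":
    for use_case in INVENTORY:
        print(treatment_for(use_case))
```

Keeping the tier assignment in an explicit inventory, rather than inferring it in code, reflects the point that classification is a legal judgment; the code only makes the consequences of that judgment visible.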

Generative AI is not left out. Providers must ensure that outputs are marked as machine-generated and must publish a summary of the content, including copyrighted works, used to train their models. The enforcement teeth are sharp: for the most serious violations, companies face fines of up to €35 million (*6) or 7% of worldwide annual turnover, whichever is higher. Even businesses headquartered outside the EU fall under these rules if they place AI systems on the European market.
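The fine ceiling is easiest to grasp as arithmetic: the cap is the higher of a fixed €35 million and 7% of worldwide annual turnover, so the percentage dominates for any company with turnover above €500 million. The minimal Python sketch below assumes that "whichever is higher" rule for the most serious violations; the turnover figures are hypothetical, and lower caps (and a different rule for smaller companies) apply to other cases.

```python
# Fine ceiling for the most serious violations (prohibited practices),
# assuming the "whichever is higher" rule that applies to companies.
FIXED_CAP_EUR = 35_000_000   # fixed amount: EUR 35 million
TURNOVER_PERCENT = 7         # 7% of total worldwide annual turnover


def max_fine_eur(worldwide_turnover_eur: int) -> int:
    """Return the fine ceiling in whole euros (integer math avoids float rounding)."""
    turnover_based_cap = worldwide_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    # Hypothetical turnover figures, in euros.
    for turnover in (100_000_000, 500_000_000, 10_000_000_000):
        print(f"turnover EUR {turnover:,} -> fine ceiling EUR {max_fine_eur(turnover):,}")
```

With these inputs, the ceiling stays at €35 million for the first two companies and rises to €700 million for the third; €500 million is the crossover point where 7% of turnover equals the fixed amount.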

The EU's approach sets a high bar, combining sweeping ambition with extraterritorial reach. For global companies, it signals that AI governance will increasingly shape competitiveness.

U.S. AI Governance: A Flexible Mix of Executive Orders, State Laws, and Voluntary Standards

Unlike the EU, the United States does not have a single, comprehensive AI law. Instead, its approach has shifted with each administration. In October 2023, the Biden administration issued Executive Order 14110 on the "Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence", a wide-ranging directive covering safety standards, privacy protections, fairness and civil rights, consumer and worker safeguards, federal use of AI, and international cooperation.

That framework was short-lived. When the Trump administration took office in January 2025, it revoked EO 14110 and issued a new executive order, "Removing Barriers to American Leadership in Artificial Intelligence." The focus shifted away from regulatory oversight and toward economic competitiveness and national security. Requirements such as submitting red team (*7) test results to the government and disclosure obligations for cloud providers were dropped, reflecting concerns about excessive corporate burden. This pivot marks a clear departure from the "safe and trustworthy" lens of the previous administration, leaving U.S. AI policy in a fluid state that warrants close monitoring.

With no overarching federal law, state legislation is beginning to fill the gap. California's AI Transparency Act, scheduled to take effect in 2026, requires providers of widely used generative AI systems to offer AI-detection tools and to include disclosures identifying AI-generated content. Alongside this, the AI Risk Management Framework (AI RMF) developed by NIST offers voluntary guidance for companies seeking to manage risks responsibly.

Taken together, these measures illustrate a U.S. strategy that prioritizes flexibility. By relying on executive orders, state initiatives, and voluntary standards, the U.S. aims to safeguard innovation while intervening selectively to address risks.

Japan's AI-Specific Law and Guidelines: From Soft Law to Effective Governance

In 2025, Japan stepped into the global regulatory arena with its first AI-specific law. On May 28, 2025, the House of Councillors passed the Act on the Promotion of Research, Development, and Utilization of Artificial Intelligence-Related Technologies (AI Promotion Act). The law seeks to strike a balance between two objectives: accelerating R&D and industrial adoption on one hand, and addressing misuse risks and public anxiety on the other. Its guiding vision is to establish Japan as "the world's most AI-friendly country."

What sets Japan apart is its light regulatory touch. The law does not impose binding penalties but instead places a "duty of effort" on businesses, research institutions, and citizens - encouraging cooperation to ensure AI safety and promote utilization. Rather than functioning as strict regulation, it provides a framework that supports innovation while encouraging voluntary governance.

To implement this framework, the government has created an AI Strategy Headquarters within the Cabinet. Through annual AI Basic Plans, this body will roll out concrete measures such as funding for R&D, human capital development, building data infrastructure, promoting transparency, and developing risk response guidelines. Companies will be expected to establish voluntary governance systems and enhance disclosure practices under this umbrella.

Japan's approach is also designed to evolve. Because the AI Promotion Act is a basic framework law, revisions are expected as technology advances and new risks emerge. Stronger guidelines, operational updates, and new enforcement mechanisms may be added in the future, requiring companies, investors, and regulators to remain adaptive.

Alongside the Act, the government has also advanced practical governance tools. Between 2024 and 2025, the Ministry of Economy, Trade and Industry (METI) and the Ministry of Internal Affairs and Communications released the AI Business Operator Guidelines (*8) (Version 1.1). These guidelines provide a comprehensive set of risk management practices for AI developers, providers, and users. Crucially, they adopt the principle of "agile governance" - a system that evolves continuously in line with technological and societal change. The guidelines also set clear expectations for the role of management and the design of corporate AI governance structures, giving companies actionable direction.

In essence, Japan has positioned itself between the EU's stringent risk-based regulation and the U.S.'s market-driven flexibility. Its model of "flexible and responsible promotion" underscores the importance of voluntary corporate governance while keeping space open for future regulatory strengthening. For businesses, this means AI strategies and governance frameworks must stay aligned with government priorities and remain nimble enough to adapt as the landscape shifts.

5. The Role of Management and Key Principles for Building an AI Governance Framework

Safe and effective AI use begins with leadership. In the era of democratized AI, and especially with generative AI, implementation has become a core management decision. Without a clear strategy from the top, uncertainty spreads through the organization. Teams hesitate, opportunities are lost, and productivity gains are left unrealized.

The responsibility of management is therefore twofold: to understand both the potential and risks of AI, and to define what level of risk is acceptable. This sets the foundation for clear usage policies and rules that must cascade through the organization. Policies might prohibit the input of sensitive information, establish review processes for AI-generated outputs, or define approved lists of tools. To embed these practices, some companies are already creating cross-functional units such as AI Governance Offices or Centers of Excellence (CoE) to provide continuous oversight.

However, governance should not be seen purely as defense. Management must also frame AI as an offensive strategy - a lever to enhance innovation, competitiveness, and growth. Building such a framework requires coordination across risk management, legal, security, and business strategy teams.

Three Practical Steps to Building an AI Governance Framework

- Risk Visualization and Prioritization

  Conduct assessments early in implementation to identify likely risks, evaluate their potential impact, and rank priorities for action.

- Design and Execution of Control Measures

  Put in place safeguards such as "do not input highly confidential data into AI" or "require human oversight in critical decisions" (human-in-the-loop).

- Ongoing Monitoring and Continuous Improvement

  Establish a PDCA cycle (Plan-Do-Check-Act) to monitor AI usage, track incidents, and ensure compliance with internal policies.
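The first and third steps can be illustrated with a small risk register. The sketch below is a hypothetical Python example, not a method prescribed by the AI Business Operator Guidelines: it scores each risk as likelihood × impact on an assumed 1-to-5 scale (a common heat-map convention), ranks the register for the Plan phase, and, for the Check phase, flags risks whose logged incidents reach an assumed threshold. The risk names echo Section 3; the `Risk` class, the helper functions, and all scores and incident counts are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int     # 1 (rare) to 5 (almost certain), assumed scale
    impact: int         # 1 (negligible) to 5 (severe), assumed scale
    incidents: int = 0  # incidents logged during the monitoring period

    @property
    def score(self) -> int:
        """Simple priority score: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Plan: rank risks from highest to lowest score (ties keep input order)."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


def needs_review(risks: list[Risk], incident_threshold: int = 2) -> list[str]:
    """Check: names of risks whose incident count meets the threshold."""
    return [r.name for r in risks if r.incidents >= incident_threshold]


if __name__ == "__main__":
    register = [
        Risk("Hallucination", likelihood=4, impact=3, incidents=3),
        Risk("Information leakage", likelihood=2, impact=5),
        Risk("Prompt injection", likelihood=3, impact=4, incidents=1),
        Risk("Copyright infringement", likelihood=2, impact=3),
    ]
    for risk in prioritize(register):
        print(f"{risk.score:>2}  {risk.name}")
    # Act: risks flagged here would feed the next Plan phase.
    print("Review:", needs_review(register))
```

The multiplicative score is only one convention; organizations that treat severe-impact risks as non-negotiable may instead rank by impact first, so the scoring rule itself is a policy choice for management.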

Japan's AI Business Operator Guidelines reinforce the importance of management-led governance, ethical awareness, and AI literacy across the workforce. In practice, this means management must act simultaneously as the champion of AI adoption and the guardian of risk management, steering their companies with both offense and defense in mind.

6. Essentials for Establishing Responsible AI Utilization

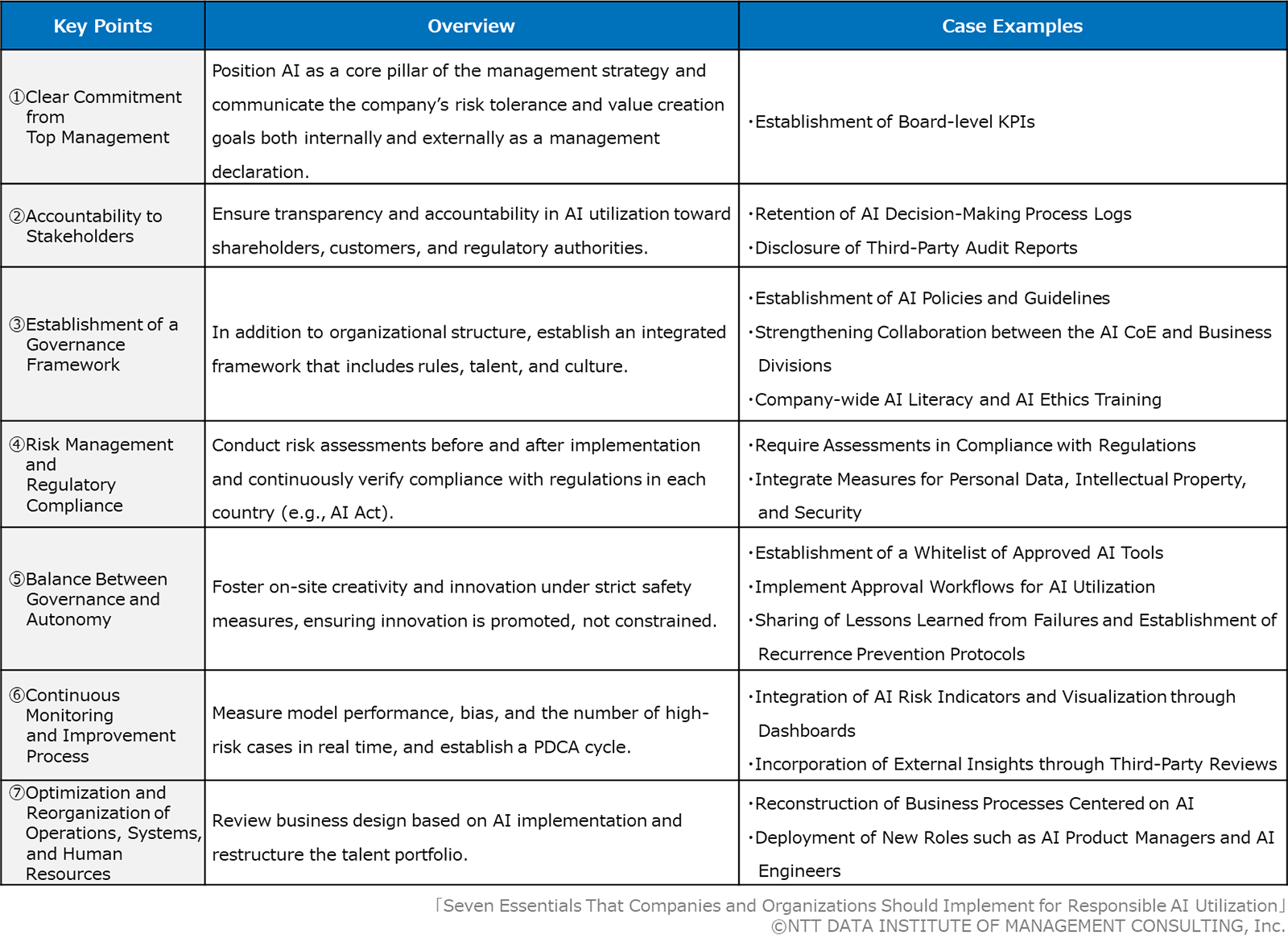

As AI becomes central to corporate competitiveness, responsible utilization grounded in ethics will determine which companies win trust from markets, regulators, and society. The following seven essentials (see Figure 4) provide a foundation for building that trust and ensuring sustainable growth.

Figure 4: Seven Essentials That Companies and Organizations Should Implement for Responsible AI Utilization

The companies that succeed will not be those that hold back out of fear, but those that move forward with governance and ethics as the backbone of their AI strategies. By investing in robust governance frameworks, organizations can capture medium- to long-term growth while minimizing exposure to major risks. In return, they strengthen their credibility with stakeholders across the market, society, and regulatory authorities.

When risks are managed effectively, AI becomes a strategic weapon. In the post-generative AI era, the role of management is clear: to balance offense (innovation and competitiveness) with defense (risk control and accountability). Companies that achieve this balance will not only sharpen their competitive edge but also fulfil their broader social responsibility.

7. Conclusion

Global trends in AI governance highlight both the extraordinary potential of AI to drive productivity and the serious risks it carries in terms of security and ethics. For management, the task is not to treat these risks as constraints, but to harness them as catalysts - springboards for stronger governance and more confident adoption.

The pace of technological progress and regulatory change leaves no room for complacency. Now is the moment to reassess your company's AI governance framework and take decisive steps to strengthen it.

In the post-generative AI era, the real winners will be those organizations that adapt quickly, calibrate risk with clarity, and embrace bold innovation underpinned by trust. They are the companies that will set the standard for responsible leadership in the age of AI.

- (*1) Reuters: "Microsoft racks up over $500 million AI savings while slashing jobs - Bloomberg" (July 9, 2025)

- (*2) Yano Research Institute Ltd. Press Release: "Corporate Survey on the Actual Usage of Generative AI in Japan" (April 18, 2025)

- (*3) Teikoku Databank, Ltd.: "TDB Business View (Survey on the Utilization of Generative AI)" (August 1, 2024)

- (*4) TechCrunch: "Samsung bans use of generative AI tools like ChatGPT after April internal data leak" (May 2, 2023)

- (*5) Data Protection Report: "Italian Garante bans ChatGPT from processing personal data of Italian data subjects" (April 2023)

- (*6) Approximately JPY 5.7 billion (converted at 1 EUR = 163 JPY)

- (*7) A specialized team that deliberately feeds adversarial inputs to, or manipulates, AI systems to check whether they return inappropriate responses (e.g., harmful content, misinformation, bias, or information leakage).

- (*8) Ministry of Economy, Trade and Industry (METI) website, "Guidelines for AI Service Providers"

Toshio Komoto

Associate Partner, Business Transformation Unit, NTT DATA Institute of Management Consulting; Head of Cross-Creation Group; Co-Representative, Social Innovation Alliance Japan/Denmark

Toshio joined NTT DATA Institute of Management Consulting after working at the Ministry of Internal Affairs and Communications.

A specialist in the "Re-Design" of society and industry tailored to the digital age.

He is an expert in mid- to long-term growth strategy planning, new business development, industry-government-academia collaboration, digital transformation (DX), and change management. Leads AI governance consulting.

Goa Sukegawa

Senior Manager, Business Transformation Unit, NTT DATA Institute of Management Consulting

Goa supports service creation across multiple areas including business development, engineering, digital transformation (DX) promotion, and security in the fields of information and communications, digital business, public administration, and mobility.

Specializes in solving challenges through value creation that integrates global business and engineering.

Yutaka Aoi

Senior Consultant, Business Transformation Unit, NTT DATA Institute of Management Consulting

Yutaka joined NTT DATA Institute of Management Consulting after working at the Ministry of Defense.

Specializes in strategy planning and new business development based on corporate strengths, and promotes business transformation through AI utilization and AI governance as two core pillars.

Also engages in the development of new themes through AI combined with advanced technologies, leading projects with significant social impact.

Yuta Nakao

Consultant, Business Transformation Unit, NTT DATA Institute of Management Consulting

Yuta joined NTT DATA Institute of Management Consulting as a new graduate.

Has extensive experience in a wide range of projects, including support for digital transformation (DX) promotion and business process reform for government agencies and major corporations, assistance in new business development for startups, and formulation of growth strategies for IT companies.